If you want to set up a connected home, you’ve got two options. You can buy a bunch of smart gadgets that may or may not communicate with other smart gadgets. Or you can retrofit all of your appliances with sensor tags, creating a slapdash network. The first is expensive. The second is a hassle. Before long, though, you might have a third choice: One simple device that plugs into an electrical outlet and connects everything in the room.

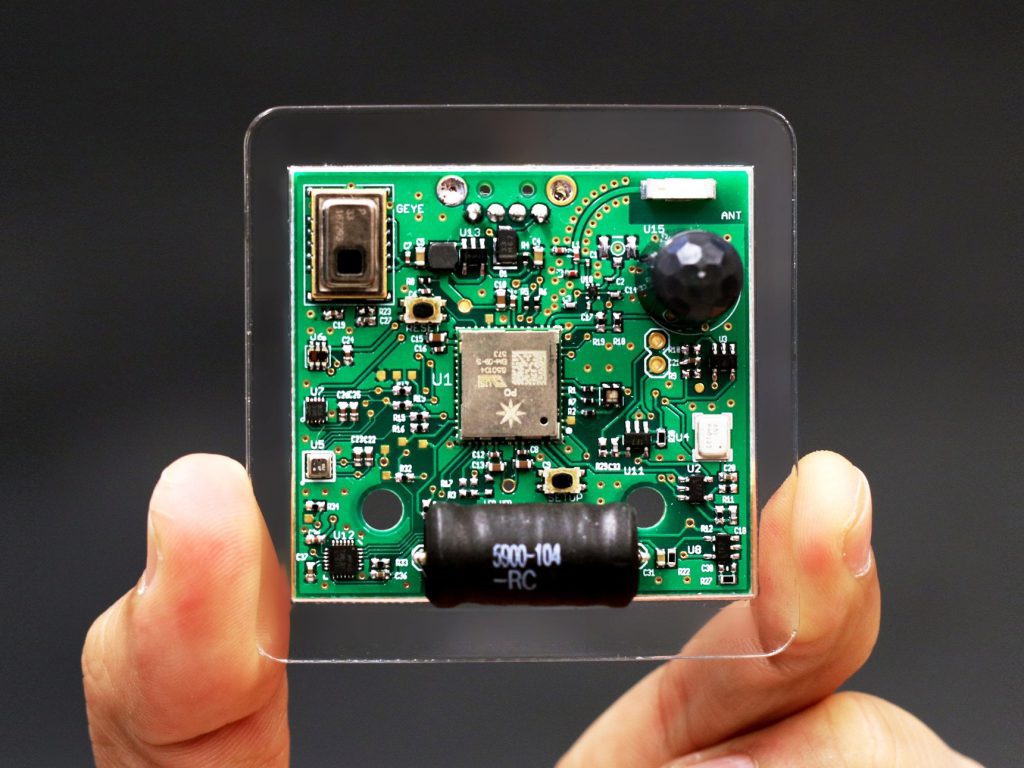

That’s the idea behind Synthetic Sensors, a Carnegie Mellon University project that promises to make creating a smart, context-aware home a snap. The tiny device, unveiled this week at ACM CHI, the big human-computer interaction conference, can capture all of the environmental data needed to transform a wide variety of ordinary household objects into smart devices. It’s a prototype for now, but as a proof of concept it’s damn impressive.

Plug the module into an electrical outlet and it becomes the eyes and ears of the room, its 10 embedded sensors logging information like sound, humidity, electromagnetic noise, motion, and light (the researchers excluded a camera for privacy reasons). Machine learning algorithms translate that data into context-specific information about what’s happening in the room. Synthetic Sensors can tell you, for example, if you forgot to turn off the oven, how much water your leaky faucet is wasting, or whether your roommate is swiping your snacks.

Researchers have long explored the concept of ubiquitous sensing, but it’s only just started making its way into homes with products from Nest, Sen.se, and Notion. Like those companies, the CMU researchers hope to connect otherwise unconnected devices, but go a step beyond that by packing several sensing functions into one device. It’s like a universal remote for connected homes. “Our initial question was, can you actually sense all these things from a single point?” says lead researcher Gierad Laput.

Yes, they could. In fact, sensors have gotten so small and sophisticated that gathering the data wasn’t hard. The challenge was doing something with it. Laput figured he could use it to answer questions people had about their environments (How much water do I use each month?) or do things like monitor their home security. But first he needed to translate that data into relevant information. “The average user doesn’t care about a spectrogram of EMI emissions from their coffee maker,” he says. “They want to know when their coffee is brewed.”

Using data captured by the sensor module, the researchers assign each object or action a unique signature. Opening the fridge, for example, produces a wealth of data: You hear the creak, see the light, and feel the movement. To a suite of sensors, it looks and sounds very different from a running faucet, which produces its own data. Laput and his team trained machine learning algorithms to recognize these signatures, building a vast library of objects and actions the module can sense. The variety of sensors is key. “These are all inferences from the data,” says Irfan Essa, director of Georgia Tech’s Interdisciplinary Research Center for Machine Learning. “If you had just one sensor, it would be much harder to distinguish.”
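To make the “signature” idea concrete, here is a minimal sketch in Python. It is not the CMU team’s pipeline: the four channel names, the toy readings (invented values, not measurements), and the nearest-centroid classifier, which averages each event’s labeled examples into one signature vector and picks whichever signature sits closest to a new reading, are all assumptions chosen for illustration, standing in for the richer features and models the researchers actually use.

```python
import math
from collections import defaultdict

# Hypothetical sensor channels; one number per channel keeps the sketch small.
CHANNELS = ("sound", "light", "vibration", "emi")

def to_vector(reading):
    """Flatten a dict of per-channel readings into a fixed-order feature vector."""
    return [float(reading.get(ch, 0.0)) for ch in CHANNELS]

def train_signatures(examples):
    """Average the labeled feature vectors for each event into one signature."""
    sums = defaultdict(lambda: [0.0] * len(CHANNELS))
    counts = defaultdict(int)
    for label, reading in examples:
        for i, v in enumerate(to_vector(reading)):
            sums[label][i] += v
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def classify(signatures, reading):
    """Return the label whose signature is closest by Euclidean distance."""
    vec = to_vector(reading)
    return min(signatures, key=lambda label: math.dist(vec, signatures[label]))

# Invented, illustrative training examples (not real sensor data).
examples = [
    ("fridge_open", {"sound": 0.3, "light": 0.9, "vibration": 0.6, "emi": 0.2}),
    ("fridge_open", {"sound": 0.4, "light": 0.8, "vibration": 0.5, "emi": 0.2}),
    ("faucet_running", {"sound": 0.7, "light": 0.0, "vibration": 0.2, "emi": 0.0}),
    ("faucet_running", {"sound": 0.8, "light": 0.1, "vibration": 0.3, "emi": 0.0}),
]

sigs = train_signatures(examples)
print(classify(sigs, {"sound": 0.35, "light": 0.85, "vibration": 0.55, "emi": 0.2}))  # fridge_open
```

Because each signature spans several channels, the fridge and the faucet differ on light and vibration as well as on sound, which is the intuition behind Essa’s point that one sensor alone would make events harder to tell apart.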

Laput says the technology can identify different activities and devices simultaneously, though not without issues. “Doing this type of machine learning across a bunch of different sensor feeds and making it truly reliable under a bunch of different circumstances is a pretty tough problem,” says Anthony Rowe, a CMU researcher working in sensor technology. His point is that human environments are complex. A truly useful universal sensor must recognize and understand the nuances of constantly changing inputs. For example, it should be able to tell your coffee maker from your blender, even if you move the appliance from one counter to another. Likewise, adding a new appliance to your kitchen can’t derail the whole system. Ensuring that level of robustness is a matter of improving the machine learning, a job that could fall to the system’s end user. “The easy solution in the short term is coming up with an interface that makes it easier for users to point out problems and retrain the system,” Rowe says.
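Rowe’s suggestion, letting users flag mistakes and retrain, can be sketched with signatures stored as running means, so a single correction shifts one signature without retraining everything. This is a hypothetical illustration, not CMU’s design: the `SignatureStore` class, its `correct` method, the three-value feature vectors (sound, vibration, EMI), and the readings are all invented for the example.

```python
import math

class SignatureStore:
    """Hypothetical per-event signatures kept as running means, so user
    corrections can be folded in without retraining from scratch."""

    def __init__(self):
        self.means = {}   # label -> mean feature vector
        self.counts = {}  # label -> number of examples seen so far

    def add_example(self, label, vec):
        """Incrementally update the label's mean with one new feature vector."""
        if label not in self.means:
            # A brand-new appliance just gets its own signature; others are untouched.
            self.means[label] = list(vec)
            self.counts[label] = 1
            return
        self.counts[label] += 1
        n = self.counts[label]
        self.means[label] = [m + (v - m) / n for m, v in zip(self.means[label], vec)]

    def classify(self, vec):
        """Return the label whose mean is closest by Euclidean distance."""
        return min(self.means, key=lambda label: math.dist(vec, self.means[label]))

    def correct(self, vec, true_label):
        """What a 'that was wrong' button might call: record the user's label."""
        self.add_example(true_label, vec)

# Invented readings: (sound, vibration, emi)
store = SignatureStore()
store.add_example("coffee_maker", [0.2, 0.1, 0.9])
store.add_example("blender", [0.9, 0.8, 0.6])

# The coffee maker is moved to a counter that rattles, so it sounds and shakes more.
moved = [0.5, 0.7, 0.9]
before = store.classify(moved)   # misread as "blender"
store.correct(moved, "coffee_maker")
after = store.classify(moved)    # now "coffee_maker"
print(before, after)
```

The running mean is just one simple choice; a real system would likely need safeguards so that a few bad corrections can’t drag a signature too far.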

That’s hard to do with CMU’s current prototype. Though the technology is solid, the interface remains practically nonexistent. Laput says he might eventually build an app to control the system, but the bigger idea is to incorporate Synthetic Sensor technology into smart home hubs as a way to capture more fine-grained data without the need for a camera (cough, Alexa). “If you embed more sensors into Alexa, you’ll potentially have a more knowledgeable Alexa,” he says, referring to Amazon’s digital assistant. And that, Laput says, is the end goal of a smart home: building an environment that knows more about itself than you do.