Thanks to Amazon, your living room might soon become much more than a place to sit and converse, entertain, or enjoy your home entertainment center. That’s because the United States Patent and Trademark Office (USPTO) recently granted the electronic commerce and cloud computing company two patents designed to create augmented reality in a living room.

Augmented reality lets users interact with both real-world objects and virtual, computer-generated objects in a room, building, or other type of space. Interaction can take several forms, the most common being motion, voice and gestures.

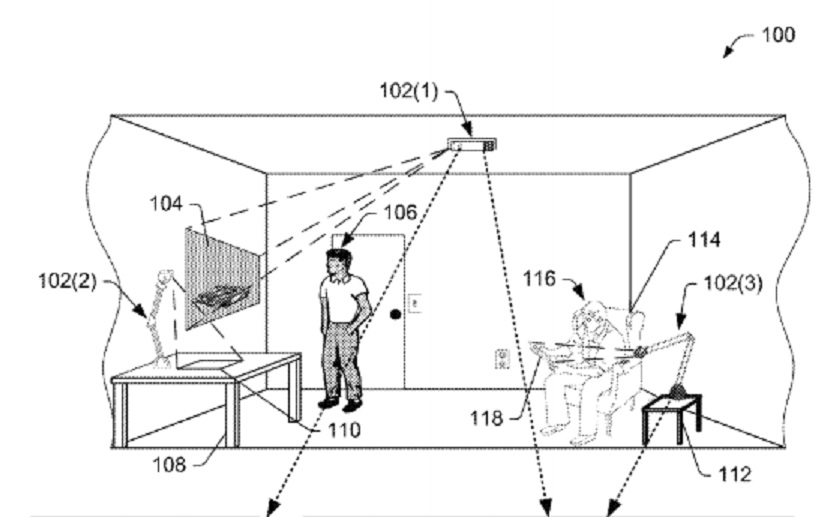

The first patent covers reflector-based depth mapping of a scene, as shown in the above image from Amazon’s patent filing.

Inventors Eric G. Marason, Christopher D. Coley and William Thomas Weatherford explain that the invention uses systems and processes to determine the depth of a scene, as well as objects in the scene, by using a light source, a reflector, a shutter mechanism and a camera.

According to the description in the patent application, a single light source is directed toward the scene and the reflector. A camera captures the light from the source itself, plus the light redirected by the reflector. The light source can illuminate several objects within a room scene, such as furniture, a table lamp, or books.

Comparing the direct light with the reflected light yields the depth of any object within the scene, which is then used to generate a 3D representation of that object or scene.
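The article does not give the math behind the comparison described above, so the Python sketch below uses a stand-in technique: standard triangulation, in which depth is recovered from how far a point shifts between two views. It is not the patent's method. It simply treats the direct and reflected light as two viewpoints separated by a known distance. The function name, parameter names and numbers are all hypothetical.

```python
def depth_from_disparity(focal_length_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Estimate depth (in meters) by triangulation.

    Assumption (not from the patent): the direct and reflected light
    behave like two viewpoints separated by `baseline_m`, and a point
    in the scene appears shifted by `disparity_px` pixels between them.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive to triangulate")
    # Classic pinhole relation: depth = focal length * baseline / disparity
    return focal_length_px * baseline_m / disparity_px


# Hypothetical values: a nearby lamp shifts more than a distant bookshelf.
lamp_depth = depth_from_disparity(800.0, 0.5, 200.0)
shelf_depth = depth_from_disparity(800.0, 0.5, 100.0)
print(lamp_depth, shelf_depth)
```

The point of the illustration is the inverse relationship: the larger the shift between the two views, the closer the object.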

The detailed description section of the patent application explains that augmented reality environments can be formed using cameras, projectors and computing devices with processing and memory capabilities. The equipment can be positioned anywhere within a room, such as mounted to the ceiling or a wall, or placed under a table.

Each set of equipment is known as an augmented reality functional node (ARFN), and there can be multiple ARFNs within a room. The ARFNs allow users to interact with physical and virtual objects in the environment.

The second patent is for object tracking in a three-dimensional environment. Inventors Samuel Henry Chang and Ning Yao describe the invention as systems and techniques to track objects, motions and gestures by capturing their outlines using sensors, such as a camera, over time to create a series of 3D images.

The movement captured in these 3D images can be fed into an augmented reality system, allowing the tracked objects and gestures to interact with the ARFN within that environment.
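As a rough illustration of tracking an outline over time, the Python sketch below reduces each captured outline to its center point and measures how that center moves between two frames. This is a simplified assumption for illustration, not the technique claimed in the patent. The type alias, function names and sample coordinates are hypothetical.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def centroid(outline: List[Point3D]) -> Point3D:
    """Average position of the points that make up one captured outline."""
    if not outline:
        raise ValueError("outline must contain at least one point")
    n = len(outline)
    return (
        sum(p[0] for p in outline) / n,
        sum(p[1] for p in outline) / n,
        sum(p[2] for p in outline) / n,
    )


def motion_between(prev: List[Point3D], curr: List[Point3D]) -> Point3D:
    """Displacement of the outline's centroid from one frame to the next."""
    a = centroid(prev)
    b = centroid(curr)
    return (b[0] - a[0], b[1] - a[1], b[2] - a[2])


# Hypothetical outline of a hand, two meters from the sensor,
# that slides half a meter sideways between two frames.
frame_1 = [(0.0, 0.0, 2.0), (1.0, 0.0, 2.0), (1.0, 1.0, 2.0), (0.0, 1.0, 2.0)]
frame_2 = [(x + 0.5, y, z) for (x, y, z) in frame_1]
print(motion_between(frame_1, frame_2))
```

A real system would have to match outlines between frames and cope with sensor noise; the sketch only shows how a sequence of 3D outlines can be turned into a motion signal.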

Together, the technologies in both patents would create and project an augmented reality representation into a room and track user input based on gestures used in the augmented reality environment. In doing so, the user’s gestures can manipulate the augmented reality.

Both inventions come from Lab126, an Amazon subsidiary and research and development company that designs and engineers consumer electronic devices. Lab126's most recent creation is the Amazon Echo.

Augmented reality inventions are becoming commonplace at Amazon. Earlier this year, the company was granted a patent for a head-mounted display that tablet users can toggle between opaque and transparent.

Amazon has not shared any plans for its new inventions or given any indication as to the type of device it would develop and sell to consumers.